Using LVM on Linux

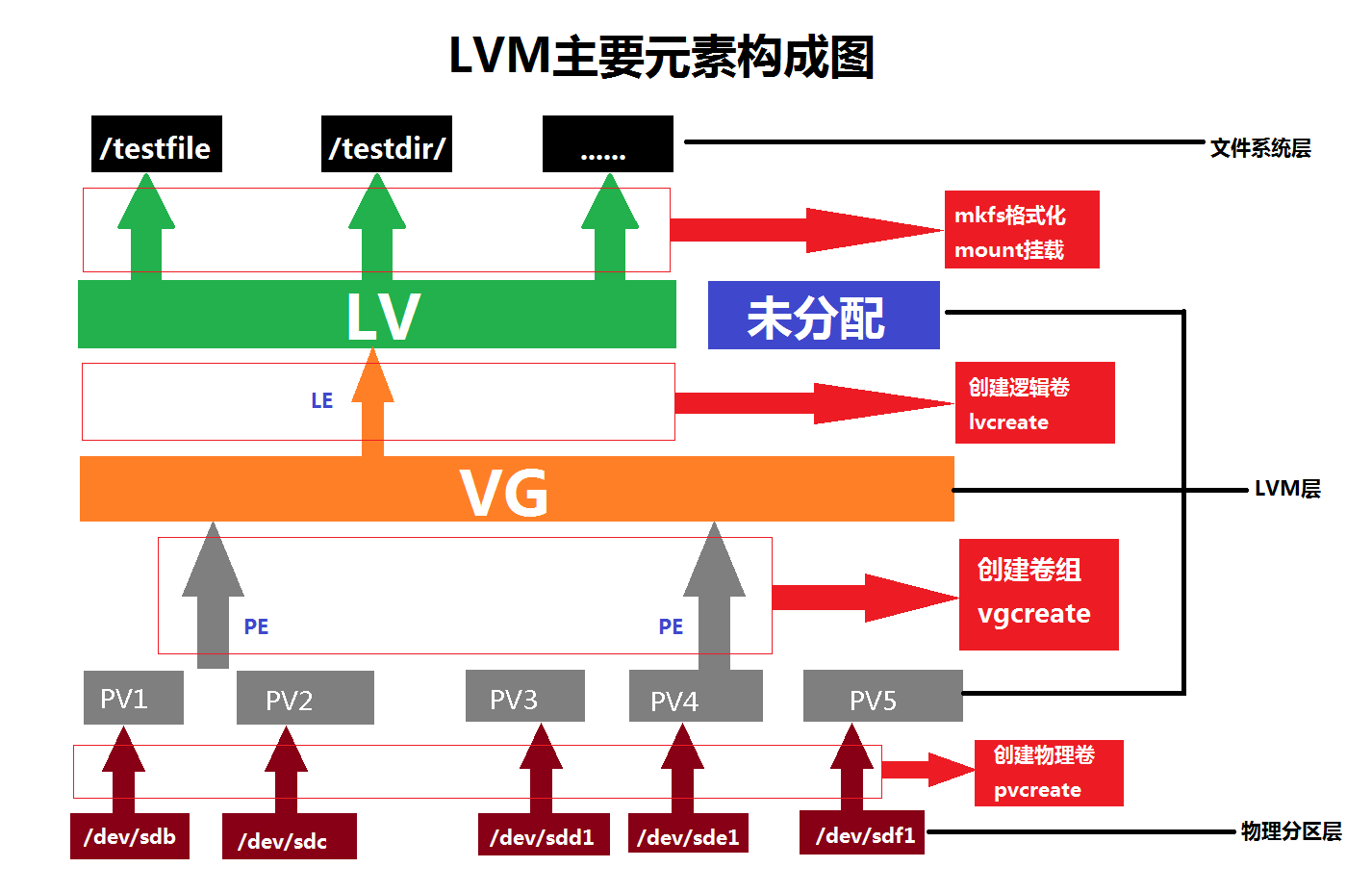

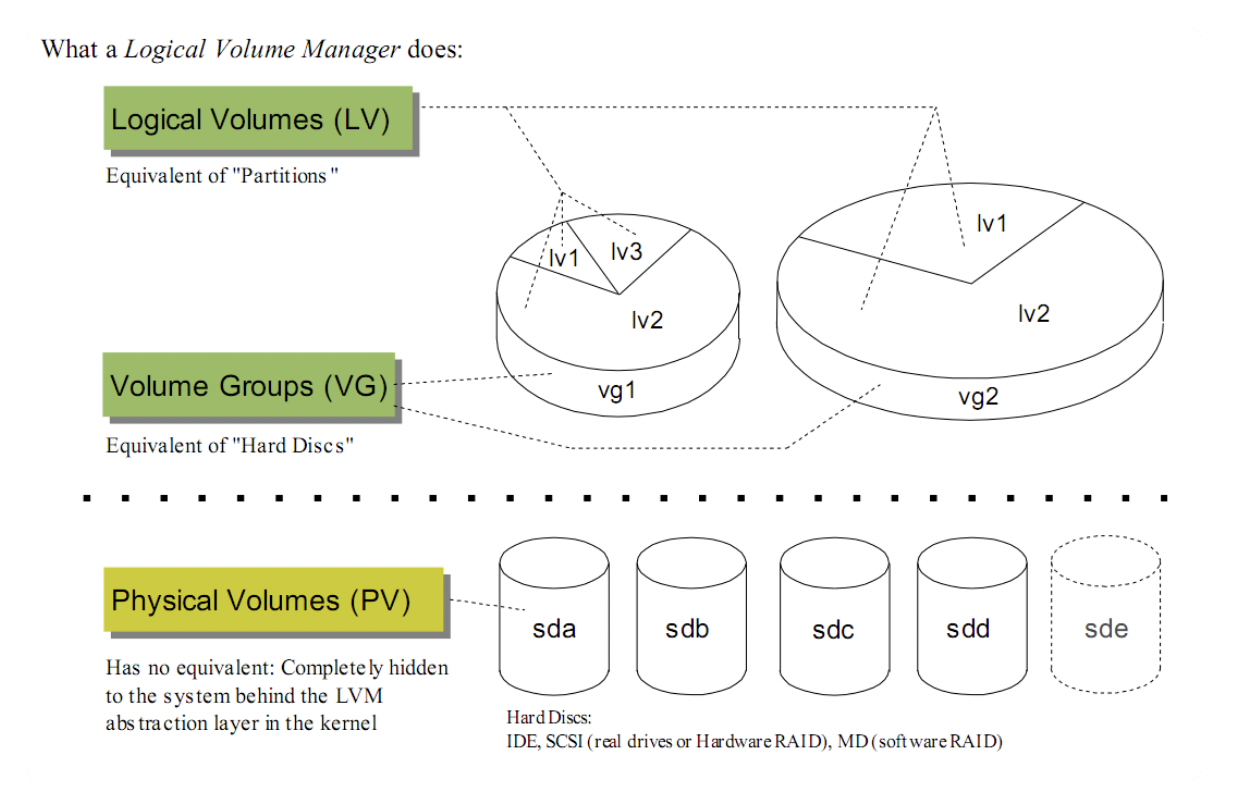

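LVM is short for Logical Volume Manager. It solves the problem of allocating disk partition sizes dynamically. LVM is not software RAID (Redundant Array of Independent Disks).

Steps to get from a raw disk to a usable file system on an LV:

disk -> partition (fdisk) -> PV (pvcreate) -> VG (vgcreate) -> LV (lvcreate) -> format (mkfs.ext4 puts an ext4 file system on the LV) -> mount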
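The chain above can be sketched as a dry run that only prints the commands, so they can be reviewed before anything touches a disk. The partition `/dev/sdx1`, the names `vg1` and `lv1`, and the mount point `/mnt/data` are placeholders, not values from this setup; partitioning with fdisk is interactive and is assumed to be done already.

```shell
#!/bin/sh
# Dry run: print (do not execute) the commands that take one partition
# to a mounted ext4 file system on an LV. All names are placeholders.
plan_lvm_setup() {
    part=$1   # partition created beforehand with fdisk, e.g. /dev/sdx1
    vg=$2     # volume group name
    lv=$3     # logical volume name
    mnt=$4    # mount point

    echo "pvcreate $part"                    # partition -> PV
    echo "vgcreate $vg $part"                # PV -> VG
    echo "lvcreate -l 100%FREE -n $lv $vg"   # VG -> LV, using all free extents
    echo "mkfs.ext4 /dev/$vg/$lv"            # format the LV as ext4
    echo "mkdir -p $mnt"
    echo "mount /dev/$vg/$lv $mnt"           # mount it
}

plan_lvm_setup /dev/sdx1 vg1 lv1 /mnt/data
```

Printing instead of executing is a deliberate choice here: every one of these commands is destructive on the wrong device.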

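How LVM manages disks

lvreduce: shrinking an LV

Unmount first -> shrink the logical boundary (the file system) -> shrink the physical boundary (the LV) -> then check the file system. ==Use with caution.==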
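The order matters because shrinking the LV before the file system cuts off live data. A minimal dry-run sketch of that order, assuming an ext4 file system; the device, mount point and size are placeholders. One step is added that the list above does not mention: resize2fs normally refuses an offline resize until `e2fsck -f` has been run, so the check is printed before the resize as well as after.

```shell
#!/bin/sh
# Dry run: print the shrink sequence for an ext4 file system on an LV.
plan_lv_shrink() {
    dev=$1    # LV device path, e.g. /dev/vg1/lv1 (placeholder)
    mnt=$2    # where the LV is mounted
    size=$3   # target size, e.g. 50G; used for both the fs and the LV

    echo "umount $mnt"              # 1. unmount
    echo "e2fsck -f $dev"           #    resize2fs expects a freshly checked fs
    echo "resize2fs $dev $size"     # 2. shrink the file system (logical boundary)
    echo "lvreduce -L $size $dev"   # 3. shrink the LV (physical boundary)
    echo "e2fsck -f $dev"           # 4. check the file system again
    echo "mount $dev $mnt"
}

plan_lv_shrink /dev/vg1/lv1 /mnt/data 50G
```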

    [aliyun@uos15 15:07 /dev/disk/by-label]

Creating and extending LVM

    sudo vgcreate vg1 /dev/nvme0n1 /dev/nvme1n1  # create vg1 on two physical disks

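Creating an LVM volume

    function create_polarx_lvm_V62(){

-I 64K sets the stripe size; the default is 64K. The MySQL page size is 16K, so 16K is the better choice.

By default lvcreate creates a linear volume, which uses one disk at a time and cannot add up the IOPS of multiple disks:

    #lvcreate -h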
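To get a striped rather than linear volume, lvcreate takes `-i` (number of stripes) together with `-I` (stripe size). The sketch below only prints the command; `vg1`, `lv1`, two stripes and 16K are illustrative values matching the two-disk VG and the 16K page size discussed here, not the contents of the original script. It also rejects stripe sizes that are not a power of two, which LVM requires.

```shell
#!/bin/sh
# Dry run: print an lvcreate command for a striped LV.
plan_striped_lv() {
    vg=$1          # volume group
    lv=$2          # LV name
    stripes=$3     # number of PVs to stripe across (-i)
    stripe_kb=$4   # stripe size in KiB (-I)

    # LVM requires the stripe size to be a power of two.
    if [ "$stripe_kb" -le 0 ] || [ $((stripe_kb & (stripe_kb - 1))) -ne 0 ]; then
        echo "stripe size must be a power of two (KiB): $stripe_kb" >&2
        return 1
    fi
    echo "lvcreate -i $stripes -I ${stripe_kb}K -l 100%FREE -n $lv $vg"
}

plan_striped_lv vg1 lv1 2 16
```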

remount

A file system in active use cannot be unmounted. To change its mount options, consider mount's -o remount option.

    [root@ky3 ~]# mount -o lazytime,remount /polarx/  # add the lazytime option

Be very careful with remount: it can trigger massive reclaim of slab and other caches, driving sys CPU to 100% and taking down the whole machine.

    [2023-10-26 15:04:49][kernel][info]EXT4-fs (dm-0): re-mounted. Opts: lazytime,data=writeback,nodelalloc,barrier=0,nolazytime

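A more elaborate LVM creation script

    function disk_part(){

Created this way (linear), LVM does not use the disks in parallel: performance is about the same as a single disk, and adding disks does not improve read/write performance.

Check the parameters lvcreate used:

    #lvs -o +lv_full_name,devices,stripe_size,stripes

==Pay special attention to stripes: it is the number of disks used together, so their IOPS add up. The default is 1, meaning only one disk is used, i.e. linear.==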
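A quick way to spot volumes that were left at the default is to filter the `lvs` output on the `stripes` column. This is a minimal sketch: `find_linear_lvs` is a hypothetical helper, and it assumes the two-column output of `lvs --noheadings -o lv_full_name,stripes` (one row per segment).

```shell
#!/bin/sh
# Reads "lv_full_name stripes" rows on stdin, as printed by
#   lvs --noheadings -o lv_full_name,stripes
# and lists the LVs whose segments use a single stripe (linear).
find_linear_lvs() {
    awk '$2 == 1 { print $1 }'
}

# Only query LVM when the tool is installed; lvs itself usually needs root.
if command -v lvs >/dev/null 2>&1; then
    lvs --noheadings -o lv_full_name,stripes 2>/dev/null | find_linear_lvs
fi
```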

Installing LVM

    sudo yum install lvm2 -y

Inspecting LVM with dmsetup

dmsetup is one of the management tools for the kernel's Device Mapper.

    dmsetup ls

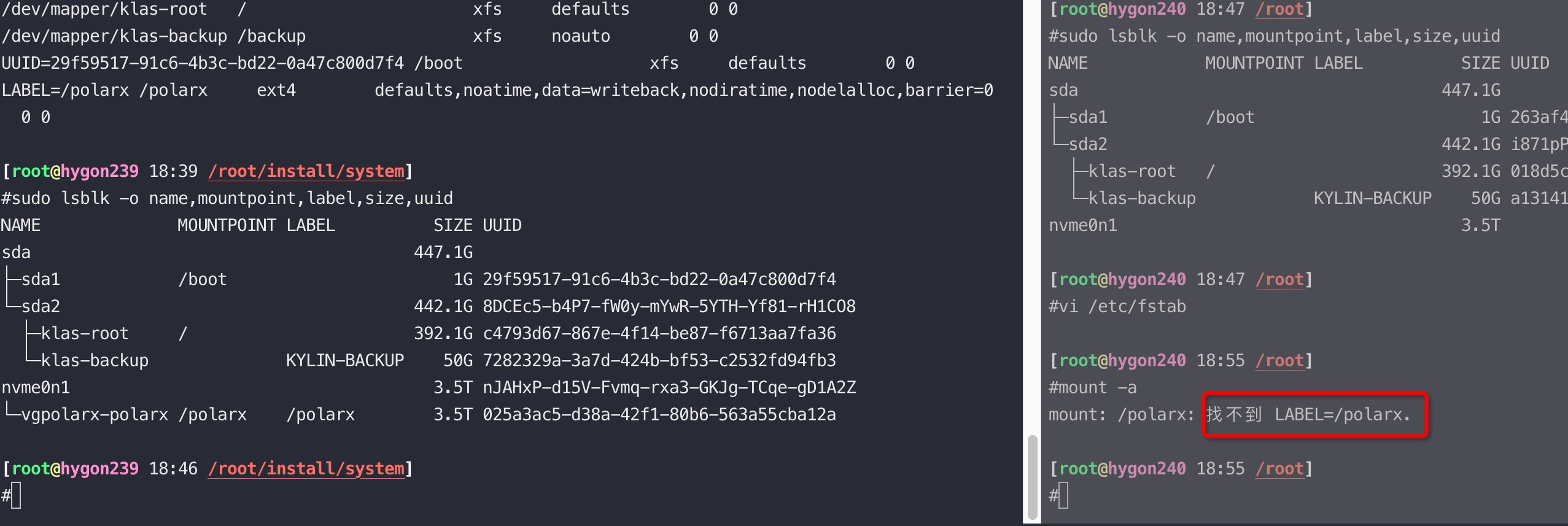

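Reboot failure

On Kylin, a reboot can fail with "mount: /polarx: can't find LABEL=/polarx." and leave the OS unable to boot. Enter emergency mode, comment out the polarx line in /etc/fstab, then reboot.

This happens because the LVM volume's label and UUID were lost, so the mount fails.

Check the device labels:

    sudo lsblk -o name,mountpoint,label,size,uuid   # or: lsblk -f

Fix:

In emergency mode, edit /etc/fstab to remove the broken mount entry, then fix the label.

    #blkid  # query UUID and label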
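Commenting out the broken entry by hand is error-prone in emergency mode, so a small filter can help. The sketch below is one possible approach, not the procedure used in the original incident: `comment_out_mount` is a hypothetical helper that prints a copy of an fstab file with the entry for one mount point disabled and leaves the original untouched. The `e2label` line is shown only as a comment, and its device path is a placeholder.

```shell
#!/bin/sh
# Print a copy of an fstab file with every active entry for the given
# mount point commented out. The original file is not modified.
comment_out_mount() {
    fstab=$1   # path to the fstab file
    mnt=$2     # mount point to disable, e.g. /polarx
    awk -v mp="$mnt" '
        $1 !~ /^#/ && $2 == mp { print "#" $0; next }   # disable the matching entry
        { print }                                       # keep everything else
    ' "$fstab"
}

# Example (review the result before replacing /etc/fstab by hand):
#   comment_out_mount /etc/fstab /polarx > /tmp/fstab.new
# Restoring an ext4 label afterwards (device path is a placeholder):
#   e2label /dev/vg1/lv1 /polarx
```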

For example, the right-hand side of the figure below shows the failed boot.

Software RAID

mdadm (multiple devices admin) is a very useful tool for managing software RAID. It can create, manage and monitor RAID devices. When creating an array with mdadm you can use whole disks (such as /dev/sdb, /dev/sdc) or specific partitions (/dev/sdb1, /dev/sdc1).

mdadm usage

mdadm --create device --level=Y --raid-devices=Z devices

-C | --create /dev/mdn

-l | --level 0|1|4|5

-n | --raid-devices device [..]

-x | --spare-devices device [..]

Create: -l 0 means RAID 0, -l 10 means RAID 10

    mdadm -C /dev/md0 -a yes -l 0 -n2 /dev/nvme{6,7}n1  # raid0

Delete

    umount /md0

Monitoring RAID

    #cat /proc/mdstat
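For redundant levels, /proc/mdstat shows one `U` per healthy member and `_` for a missing one (for example `[U_]`). A minimal monitoring sketch built on that convention; `degraded_arrays` is a hypothetical helper, and RAID 0 arrays never match because they have no such status field.

```shell
#!/bin/sh
# List md arrays whose member status field contains "_" (a missing member).
degraded_arrays() {
    mdstat=${1:-/proc/mdstat}
    awk '
        /^md/ { name = $1 }                                # remember the array being described
        /\[[U_]*_[U_]*\]/ { if (name != "") print name }   # e.g. [U_] or [_U]
    ' "$mdstat"
}

# Report on the live system only when md is present.
if [ -r /proc/mdstat ]; then
    degraded_arrays
fi
```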

Controlling sync speed

    #sysctl -a |grep raid
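The grep above is assumed to match `dev.raid.speed_limit_min` and `dev.raid.speed_limit_max`, the two md sysctls that throttle resync; the original output is not shown, so treat that as an assumption. A small sketch that reads them directly from /proc, where `show_raid_speed_limits` is a hypothetical helper:

```shell
#!/bin/sh
# Show the md resync throttles. The directory can be overridden for testing.
show_raid_speed_limits() {
    dir=${1:-/proc/sys/dev/raid}
    for name in speed_limit_min speed_limit_max; do
        if [ -r "$dir/$name" ]; then
            echo "$name = $(cat "$dir/$name") KB/s"
        else
            echo "$name: not available (md module not loaded?)"
        fi
    done
}

show_raid_speed_limits
# Changing a limit needs root, for example:
#   sysctl -w dev.raid.speed_limit_max=<value in KB/s>
```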

nvme-cli

    nvme id-ns /dev/nvme1n1 -H

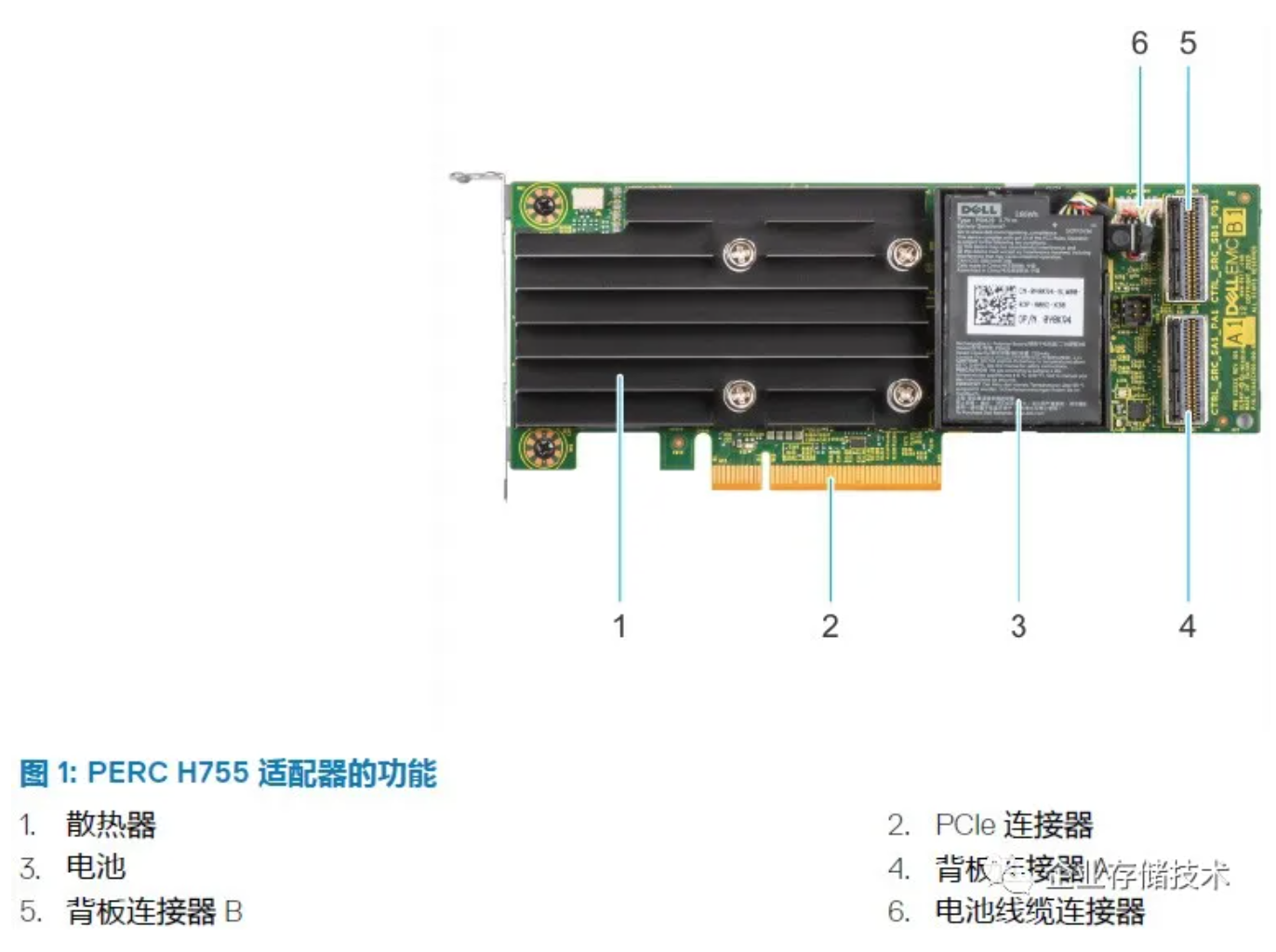

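Hardware RAID cards

Effect of mount options on performance

Recommended mount options: defaults,noatime,data=writeback,nodiratime,nodelalloc,barrier=0. These perform about the same as a plain defaults 0 0 entry, but adding lazytime makes performance very poor in some scenarios.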

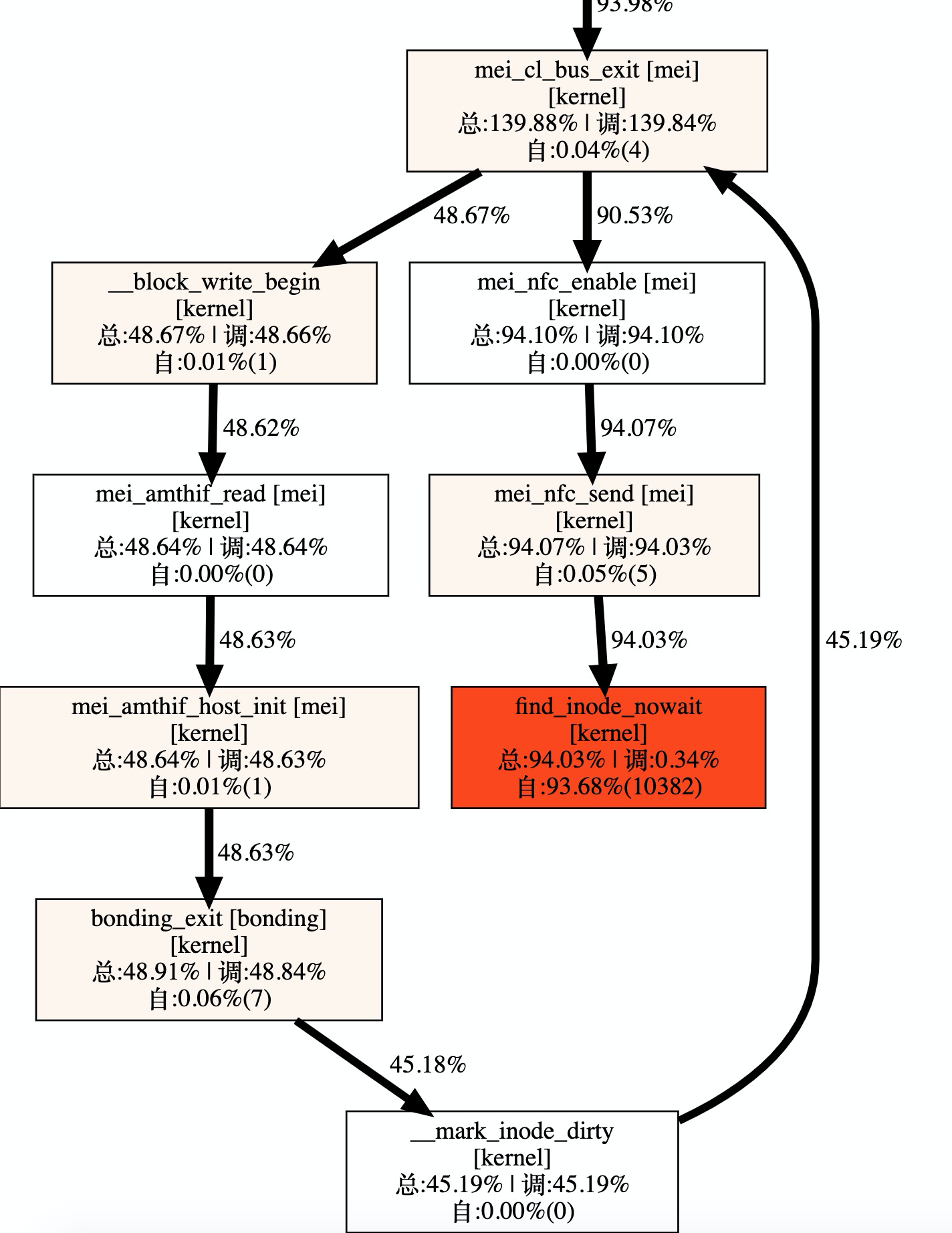

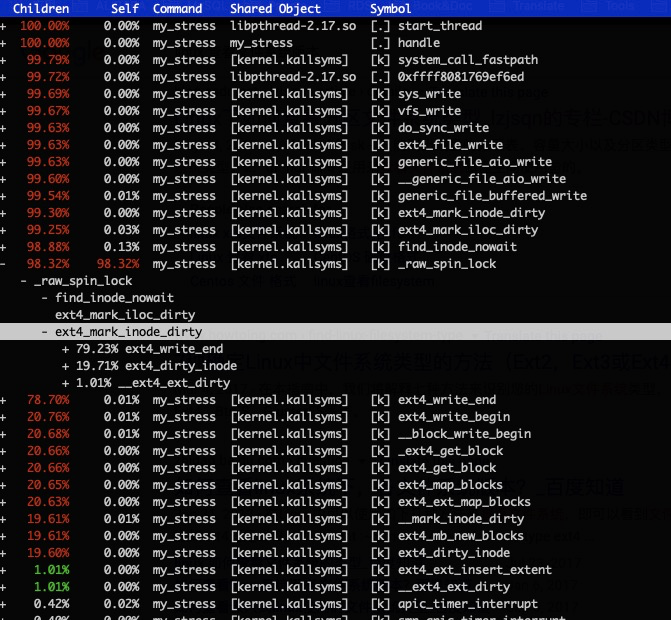

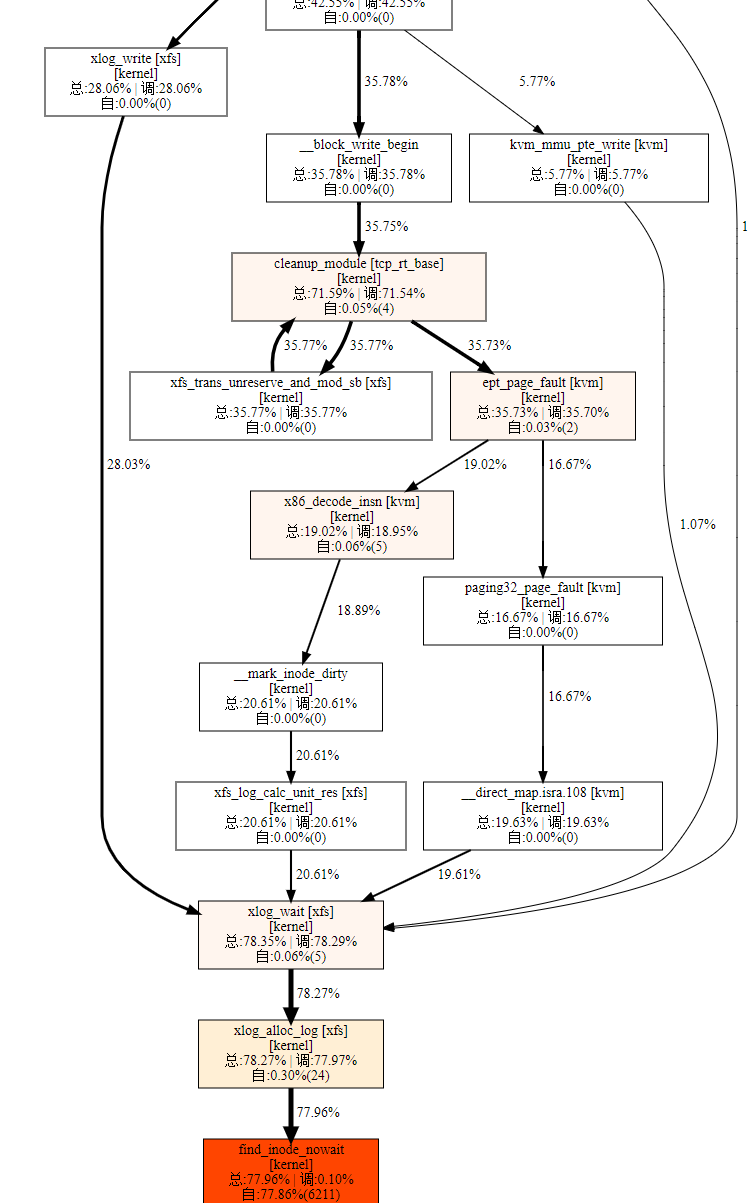

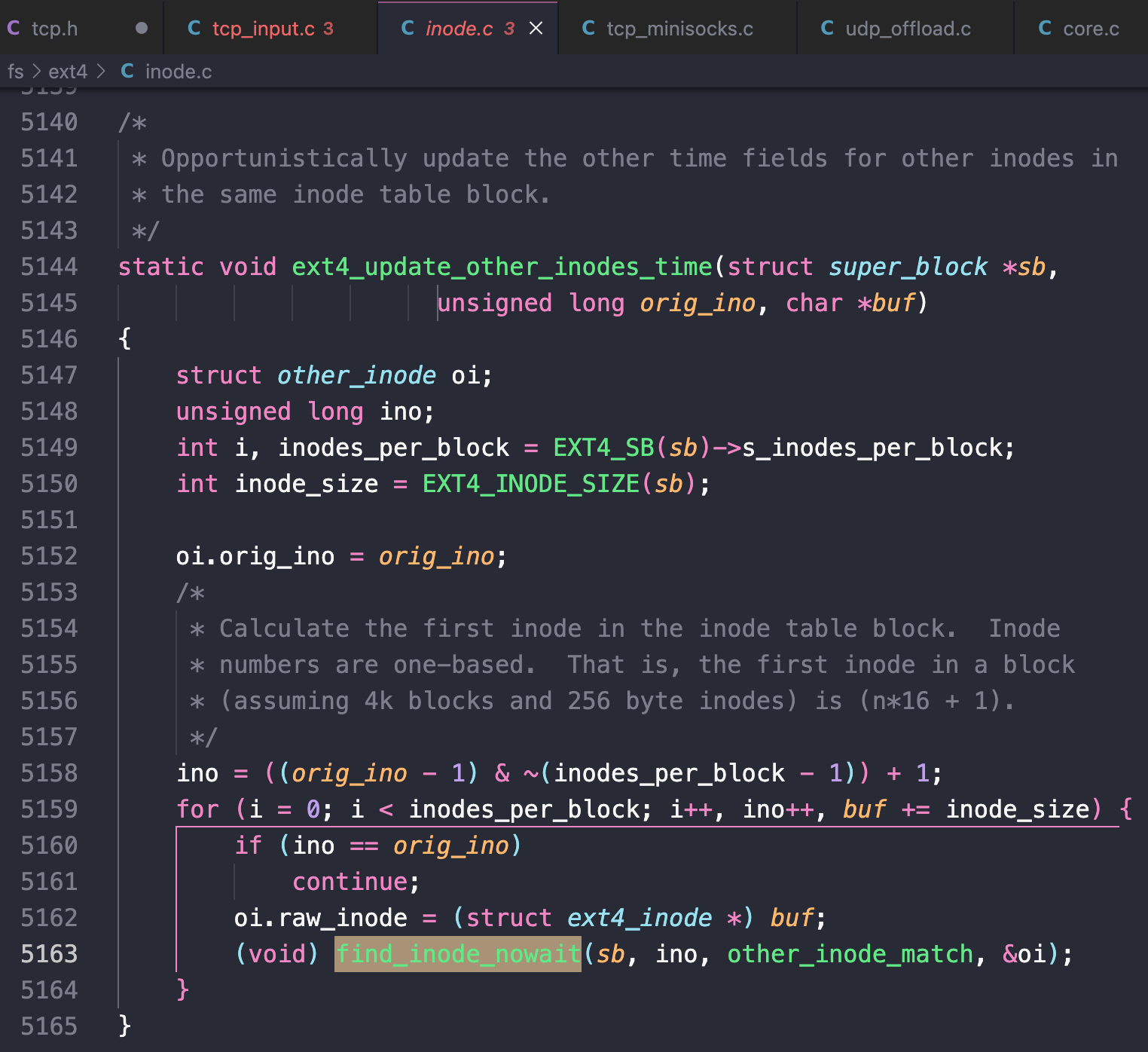

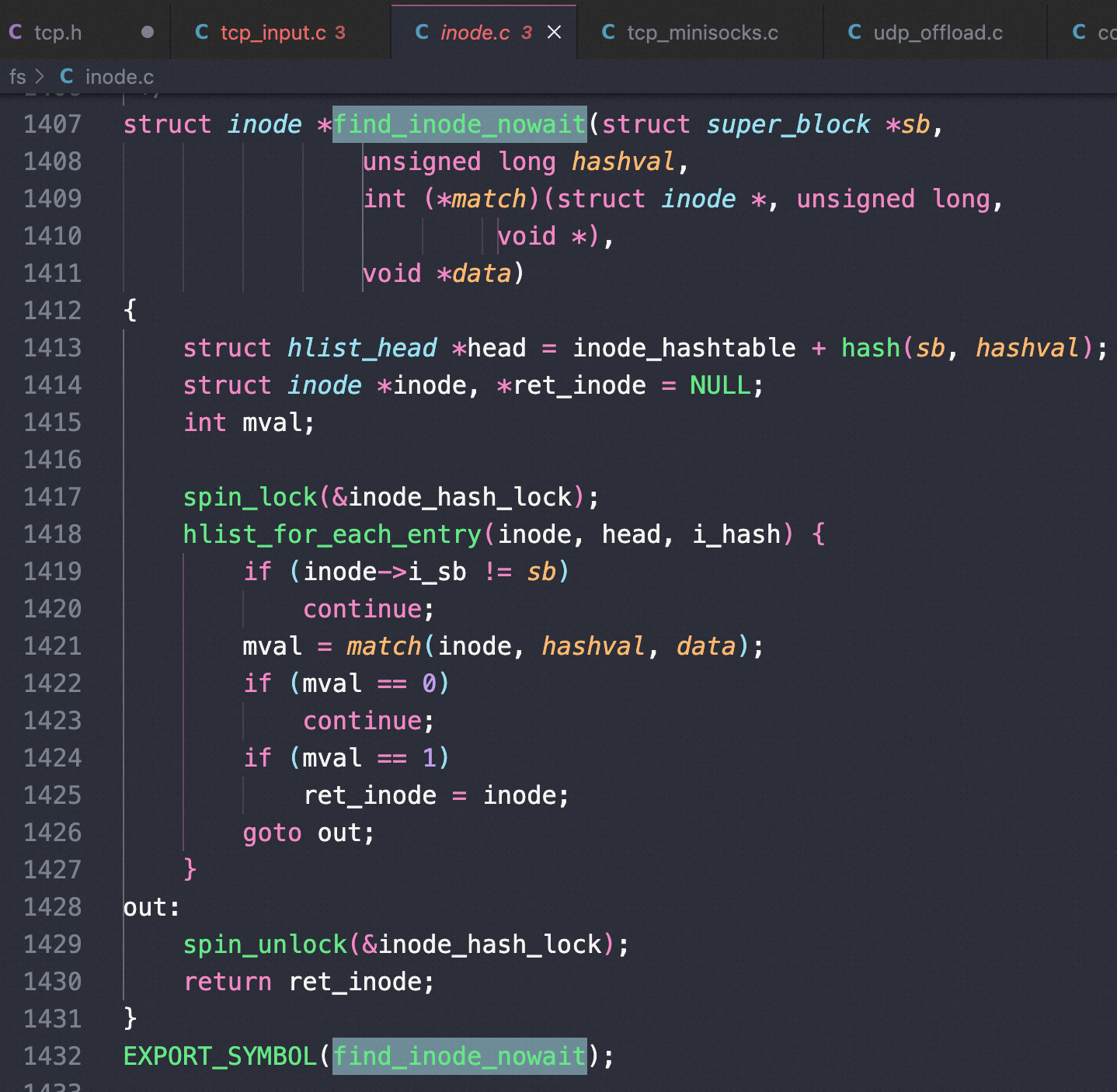

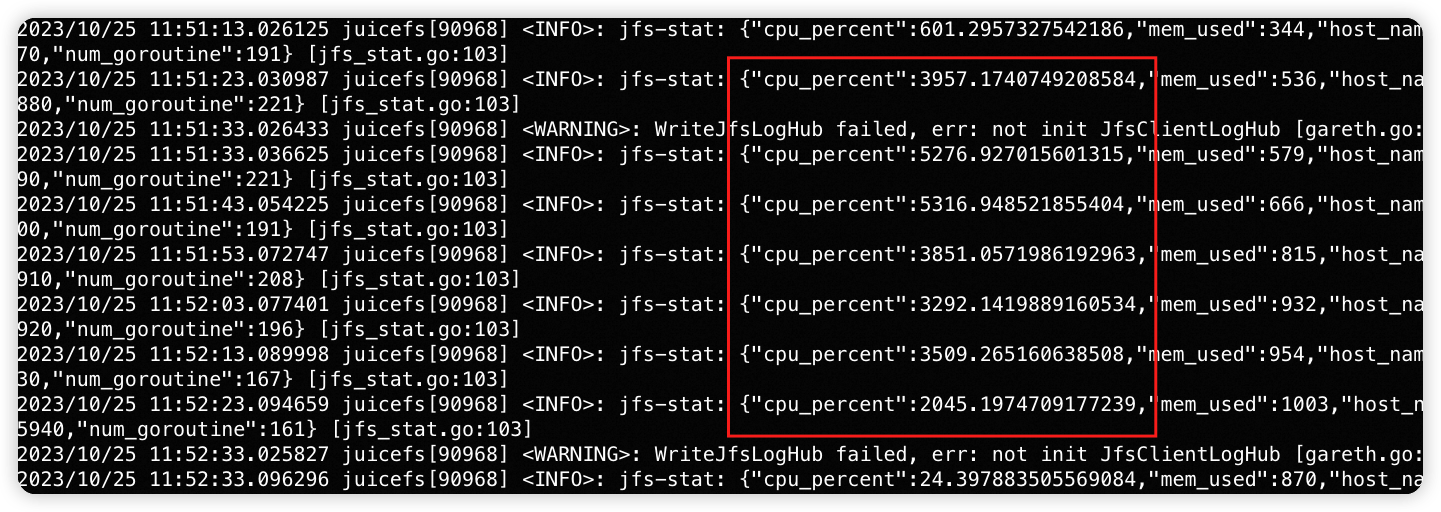

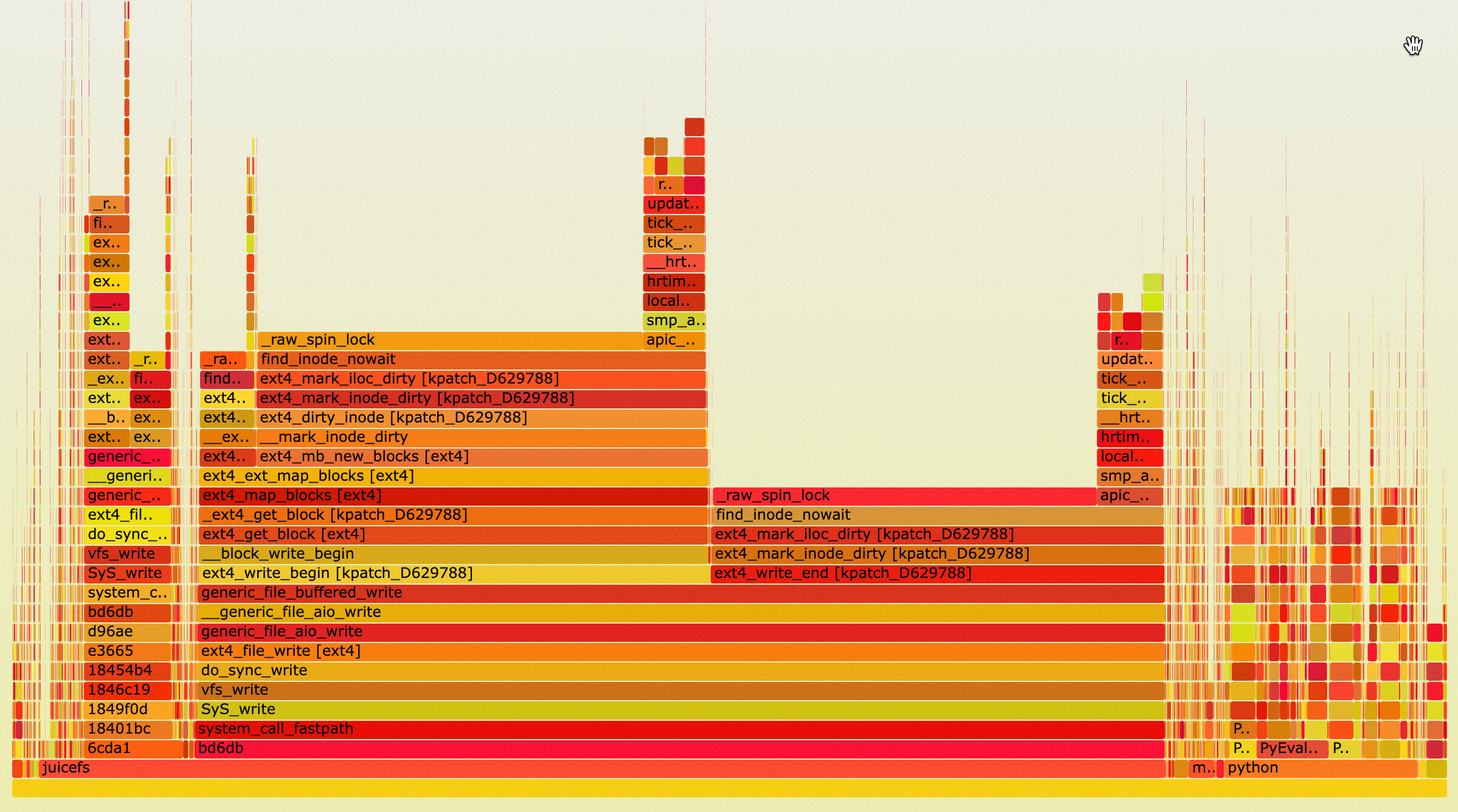

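For example, a MySQL filesort can trigger a find_inode_nowait hotspot. During filesort, MySQL operates on files in the order create, open, unlink, write, read, close. With the lazytime option, once the file system sees that an inode has been modified it also updates the inodes in the same inode table, which turns the spin lock in find_inode_nowait into a hotspot.

So make sure lazytime is not among the mount options.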
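Checking for this is straightforward because active mount options are listed in the fourth field of /proc/mounts. The sketch below prints every mount point that has `lazytime` set; `lazytime_mounts` is a hypothetical helper, and it matches the option exactly so that `nolazytime` is not reported.

```shell
#!/bin/sh
# Print mount points whose option list contains exactly "lazytime".
lazytime_mounts() {
    mounts=${1:-/proc/mounts}
    awk '{
        n = split($4, opts, ",")            # field 4 is the comma-separated option list
        for (i = 1; i <= n; i++)
            if (opts[i] == "lazytime") { print $2; break }
    }' "$mounts"
}

if [ -r /proc/mounts ]; then
    lazytime_mounts
fi
```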

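If a SQL statement creates a large number of temporary tables and /tmp/ is mounted with lazytime, the same problem occurs, as the stack in the figure shows:

The corresponding kernel code:

Another application that often maxes out the CPU because of the find_inode_nowait hotspot:

The lazytime problem can be reproduced with code: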


Proactive; tooling and productivity; oriented toward faults and incidents

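Repairing LVM failures

File system corruption is a fairly common cause of boot failure. Its usual causes are a lost partition and a file system that needs manual repair.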

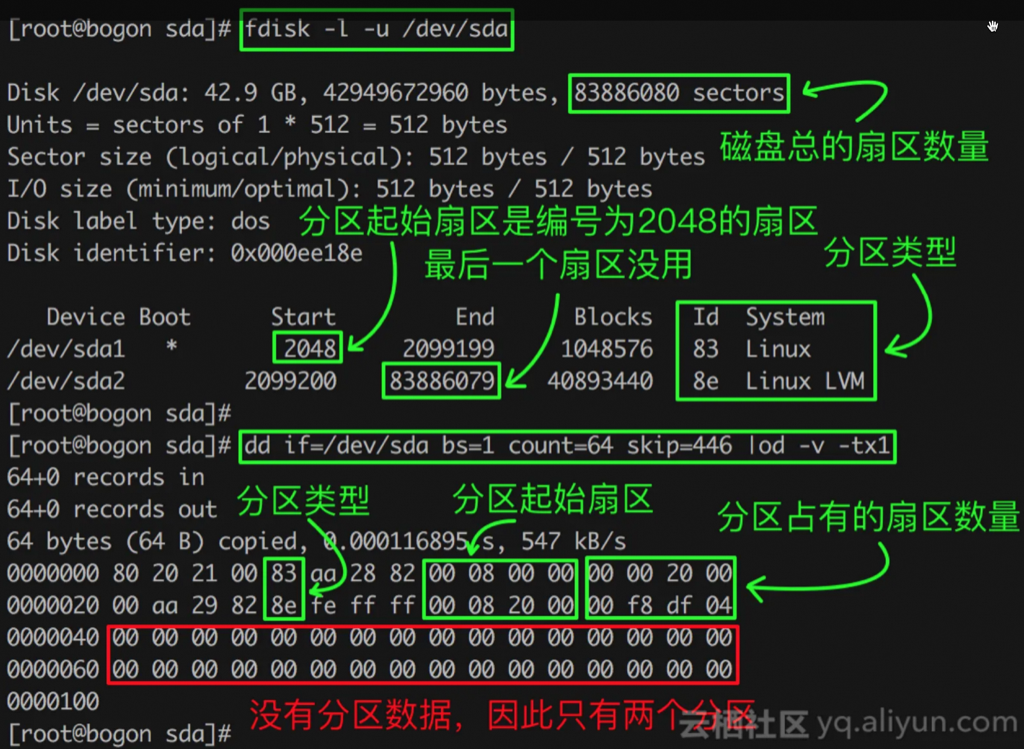

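1. Lost partition table: recreating the partition table is enough. Partition table information only changes data at a fixed location in the first sector of the disk, so as long as you know how the disk was partitioned, redoing the partitions after the table is lost does not damage the data on the disk. The start position and size of each partition must be correct, though. Once the start position is known, repartition with fdisk. The key question is therefore how to determine where the partition starts.

Determining the partition start:

MBR (Master Boot Record) is a 512-byte data structure in the first sector of the disk, and the partition table is part of it. Use dd if=/dev/vdc bs=512 count=1 | hexdump -C to dump the raw data of sector 0 in hexadecimal:

The dump shows each partition's type ID, start sector and sector count, and these values pin down the partition's location. For LVM, the start position can then be computed from the LABELONE marker.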
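Reading those fields out of a hex dump by eye is tedious, so here is a minimal decoding sketch. It relies on the standard MBR layout: four 16-byte entries starting at byte offset 446, with the type ID at byte 4, the start LBA at bytes 8-11 and the sector count at bytes 12-15 of each entry, both little-endian. `mbr_partitions` is a hypothetical helper name; type 0x8e is the ID conventionally used for Linux LVM.

```shell
#!/bin/sh
# Decode the four primary partition entries of an MBR (device or image file).
mbr_partitions() {
    img=$1
    i=0
    while [ "$i" -lt 4 ]; do
        off=$((446 + 16 * i))
        # Read the 16-byte entry as unsigned decimal bytes into $1..$16.
        set -- $(dd if="$img" bs=1 skip="$off" count=16 2>/dev/null | od -An -v -tu1)
        i=$((i + 1))
        [ "$#" -eq 16 ] || continue   # short read: skip this slot
        [ "$5" -ne 0 ] || continue    # type 0x00 means the slot is unused
        # Assemble the little-endian 32-bit start LBA and sector count.
        start=$(( $9 + ${10} * 256 + ${11} * 65536 + ${12} * 16777216 ))
        count=$(( ${13} + ${14} * 256 + ${15} * 65536 + ${16} * 16777216 ))
        printf 'partition %d: type=0x%02x start=%d sectors=%d\n' "$i" "$5" "$start" "$count"
    done
}

# Example (read-only, needs permission to read the device):
#   mbr_partitions /dev/vdc
```

The function only reads from the device, so it is safe to run before deciding what to pass to fdisk.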

References

https://www.tecmint.com/manage-and-create-lvm-parition-using-vgcreate-lvcreate-and-lvextend/

pvcreate error : Can’t open /dev/sdx exclusively. Mounted filesystem?

Software RAID configuration guide